A Detailed Guide to the Python requests Module for Web Scraping

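Compared with urllib, the third-party library requests is simpler and more user-friendly, which is why it is one of the most commonly used libraries in scraping work.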

Installing requests

Beginner-level scraping usually starts with the requests module. To install it on Windows, run this in cmd:

pip install requests

On macOS, run this in Terminal:

pip3 install requests

Basic usage of requests

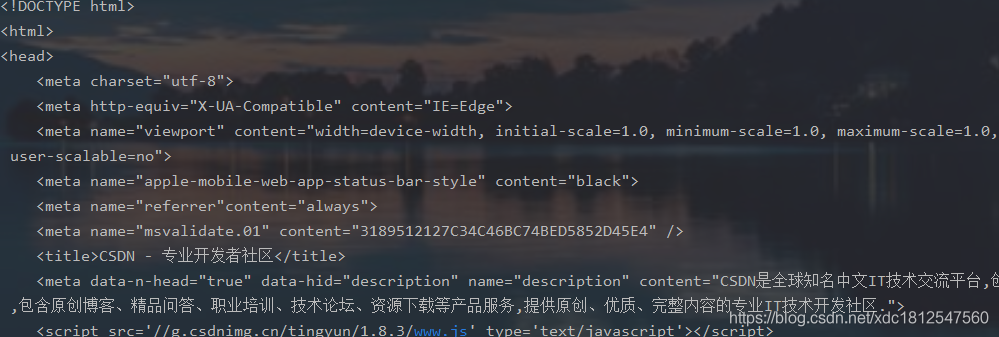

```python
import requests

url = 'https://www.csdn.net/'
response = requests.get(url)
# response.text returns the body as a Unicode string (str)
print(response.text)
```

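* `response.text`: the body decoded to a Unicode string (`str`)
* `response.content`: the body as raw bytes
* `response.content.decode('utf-8')`: decode the bytes manually
* `response.url`: the URL of the response
* `response.encoding = 'utf-8'`: set the encoding that `response.text` uses for decoding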
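The following minimal sketch makes the difference between `text`, `content` and `encoding` concrete. The helper names `response_text` and `show_body` are hypothetical. The assumption that a page without a declared charset is really UTF-8 is also ours; requests does not guarantee it.

```python
import requests


def response_text(response: requests.Response, fallback: str = "utf-8") -> str:
    """Return the body as str, overriding a missing or likely-wrong encoding.

    requests guesses response.encoding from the Content-Type header; for text/*
    responses without a charset it falls back to ISO-8859-1, which garbles
    UTF-8 pages. Assumption: such pages are really encoded as `fallback`.
    """
    if response.encoding is None or response.encoding.lower() == "iso-8859-1":
        response.encoding = fallback  # response.text now decodes with this
    return response.text


def show_body(url: str) -> None:
    """Fetch url and print the body in its decoded and raw forms."""
    response = requests.get(url, timeout=10)
    print(response.url)                    # final URL (after any redirects)
    print(response_text(response)[:200])   # str decoded via response.encoding
    raw = response.content                 # raw bytes, no decoding applied
    print(raw.decode("utf-8", errors="replace")[:200])  # manual decoding


# Usage: show_body('https://www.csdn.net/')
```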

Status codes

`response.status_code` holds the status code of the response.

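For example:
* 200: the request succeeded
* 301: permanent redirect
* 302: temporary redirect
* 403: the server refused the request
* 404: not found (the server could not find the requested resource or page)
* 500: internal server error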

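```python
# Import requests
import requests

# Call requests.get() to send a request to the server. The url argument is the
# address we want to visit; the response is stored in the variable response.
url = 'https://www.csdn.net/'  # CSDN home page
response = requests.get(url)
print(response.status_code)  # response.status_code: the status code of the response
```

Output:

200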
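You can check `status_code` by hand. requests also offers named constants in `requests.codes`, and `Response.raise_for_status()` raises `requests.exceptions.HTTPError` for any 4xx or 5xx code. By default, requests follows redirects automatically (HEAD requests are the exception). As a result, a plain `get()` normally reports the final status, and the 301/302 hops are recorded in `response.history`. You only see those codes directly if you pass `allow_redirects=False`. The helper names `classify_status` and `fetch_checked` in this minimal sketch are hypothetical.

```python
import requests


def classify_status(code: int) -> str:
    """Map the status codes listed above to a short description."""
    if code == requests.codes.ok:                     # 200
        return "success"
    if code in (requests.codes.moved_permanently,     # 301
                requests.codes.found):                # 302
        return "redirect"
    if code == requests.codes.forbidden:              # 403
        return "forbidden"
    if code == requests.codes.not_found:              # 404
        return "not found"
    if code == requests.codes.internal_server_error:  # 500
        return "server error"
    return "other"


def fetch_checked(url: str) -> requests.Response:
    """GET url without following redirects and raise on 4xx/5xx."""
    response = requests.get(url, allow_redirects=False, timeout=10)
    print(response.status_code, classify_status(response.status_code))
    response.raise_for_status()  # raises requests.exceptions.HTTPError for 4xx/5xx
    return response
```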

Request methods

requests supports the following request methods:

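```python
import requests

url = 'https://httpbin.org/anything'  # echo service that accepts every method (assumed for illustration)

p = requests.get(url)
p = requests.post(url)
p = requests.put(url, data={'key': 'value'})
p = requests.delete(url)
p = requests.head(url)
p = requests.options(url)
```

GET requests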

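GET is the default HTTP request method:
* It has no request body.
* Its data is carried in the URL, so keep it small. Browsers and servers cap URL length, but the HTTP specification sets no fixed limit, so the often-quoted 1 KB figure is a conservative guideline rather than a hard rule.
* The data of a GET request is exposed in the browser's address bar.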

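Common situations that produce GET requests:
1. Entering a URL directly in the browser's address bar always sends a GET request.
2. Clicking a hyperlink on a page also sends a GET request.
3. Submitting a form uses GET by default, but the form can be set to use POST.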
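With requests, you usually pass the query-string data of a GET request as a dict through the `params` argument. requests URL-encodes that dict into the URL, which is exactly why GET data shows up in the address bar. The minimal sketch below builds the request with `requests.Request(...).prepare()` without sending it, so you can inspect the final URL. The parameter name `q` is a hypothetical example.

```python
import requests


def build_get_url(url: str, params: dict) -> str:
    """Return the URL requests would send for a GET with these params."""
    prepared = requests.Request("GET", url, params=params).prepare()
    return prepared.url  # the params are URL-encoded into the query string


print(build_get_url("https://www.csdn.net/", {"q": "python"}))  # https://www.csdn.net/?q=python
print(build_get_url("https://www.csdn.net/", {"q": "爬蟲"}))    # non-ASCII is percent-encoded

# Sending it for real: requests.get("https://www.csdn.net/", params={"q": "python"})
```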

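POST requests
1. The data does not appear in the address bar.
2. The protocol places no upper limit on the data size (servers may still impose their own).
3. There is a request body.
4. If a form-encoded request body contains Chinese (or other non-ASCII) characters, they are URL-encoded.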

`requests.post()` is used the same way as `requests.get()`. The difference is that `requests.post()` takes a `data` parameter that holds the request body.
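The minimal sketch below shows what the `data` parameter does to the request body. It again uses a prepared request, so nothing is sent. It also shows the `json` parameter, which requests provides for JSON bodies. The field name `kw` is hypothetical. A dict passed as `data` is form-encoded, so Chinese characters end up URL-encoded in the body.

```python
import json

import requests

URL = "https://www.csdn.net/"  # target from the article; nothing is sent below


def prepare_post(**kwargs) -> requests.PreparedRequest:
    """Build (but do not send) a POST request so its body can be inspected."""
    return requests.Request("POST", URL, **kwargs).prepare()


form = prepare_post(data={"kw": "爬蟲"})
print(form.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form.body)                     # kw=%E7%88%AC%E8%9F%B2

as_json = prepare_post(json={"kw": "爬蟲"})
print(as_json.headers["Content-Type"])  # application/json
print(json.loads(as_json.body))         # {'kw': '爬蟲'}

# Sending it for real: requests.post(URL, data={"kw": "爬蟲"})
```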

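Request headers

When we open a web page, the browser sends an HTTP request, including its request headers, to the web server. The server uses those headers to build the response it sends back to the browser. We can set the request headers ourselves: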

```python
import requests

header = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36'
}
url = 'https://www.csdn.net/'
response = requests.get(url, headers=header)
# Print the body as text
print(response.text)
```

Setting a proxy with requests

To use a proxy with requests, pass the `proxies` parameter to the request method (`get`/`post`).
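Here is a minimal sketch of the `proxies` parameter. The dict maps a URL scheme to the proxy that should carry requests for that scheme. The proxy address `127.0.0.1:8888` is a placeholder assumption, so replace it with a proxy you actually have. The helper names `build_proxies` and `get_via_proxy` are hypothetical.

```python
import requests


def build_proxies(host: str, port: int) -> dict:
    """Route both http:// and https:// URLs through one HTTP proxy."""
    proxy_url = f"http://{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


def get_via_proxy(url: str, proxies: dict) -> requests.Response:
    # The same proxies argument works for requests.post() as well.
    return requests.get(url, proxies=proxies, timeout=10)


proxies = build_proxies("127.0.0.1", 8888)  # placeholder proxy address
print(proxies)
# Usage (needs a running proxy): get_via_proxy('https://www.csdn.net/', proxies)
```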

Cookies

A cookie identifies the user through information recorded on the client side.

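HTTP is a stateless protocol. Each interaction between client and server is limited to a single request/response exchange, and once it is over, the server keeps no memory of it. On the next request, the server treats the client as a new one. To keep continuity between requests and let the server know that a request comes from the same user as before, the client's information has to be stored somewhere.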

Working with cookies in requests is simple: just specify the `cookies` parameter.
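Here is a minimal sketch of the `cookies` parameter: requests turns the dict into a `Cookie` request header. The cookie names and values are made up for illustration, and the request is only prepared, not sent.

```python
import requests

# Hypothetical cookie values; in practice copy them from your own browser.
cookies = {"UserName": "demo_user", "UserToken": "demo_token"}

prepared = requests.Request("GET", "https://www.csdn.net/", cookies=cookies).prepare()
print(prepared.headers["Cookie"])  # the dict rendered as a Cookie header

# Sending it for real:
# response = requests.get("https://www.csdn.net/", cookies=cookies, timeout=10)
```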

```python
import requests

# This cookie string was copied from the browser's developer console on the CSDN site.
# Instead of using the cookies parameter, this example sends it as-is in the cookie header.
# Paste the cookie string from your own logged-in browser in place of the placeholder.
header = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36',
    'cookie': 'uuid_tt_dd=...; UserName=...; UserToken=...; ...'
}
url = 'https://www.csdn.net/'
response = requests.get(url, headers=header)
# Print the body as text
print(response.text)
```

Session

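On the web, a session identifies the user through information recorded on the server side; the word simply means a conversation. In requests, a Session object is a more advanced feature that persists certain parameters across requests. For example, it keeps cookies across all requests made from the same Session instance, just as a browser does. We don't have to supply the cookies with every request, because the Session automatically adds the cookies it has received to subsequent requests. This is especially convenient when making a series of requests to the same site.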

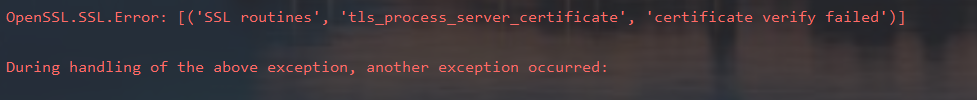
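Here is a minimal sketch of a `requests.Session`. The session merges its stored headers and cookies into every request it sends. Cookies that the server sets in a response are kept in `session.cookies` for later requests. The User-Agent string and the cookie name and value are illustrative assumptions. `prepare_request` is used only so that you can inspect the merged headers without network access.

```python
import requests

UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"  # illustrative User-Agent


def make_session() -> requests.Session:
    """Create a session whose headers apply to every request it sends."""
    session = requests.Session()
    session.headers.update({"User-Agent": UA})
    return session


session = make_session()
# A cookie placed in the jar by hand; cookies the server sets via
# Set-Cookie land in the same jar automatically after each response.
session.cookies.set("demo_token", "abc123")

prepared = session.prepare_request(requests.Request("GET", "https://www.csdn.net/"))
print(prepared.headers["User-Agent"])  # inherited from the session
print(prepared.headers["Cookie"])      # demo_token=abc123

# Real use: both requests share one connection pool and one cookie jar.
# with make_session() as s:
#     s.get("https://www.csdn.net/", timeout=10)
#     s.get("https://www.csdn.net/", timeout=10)  # sends cookies from the first
```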

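Handling untrusted SSL certificates

What is an SSL certificate? An SSL certificate is a kind of digital certificate, comparable to an electronic copy of a driver's license, passport, or business license.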

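Because it is installed on the server, it is also called an SSL server certificate. An SSL certificate follows the SSL protocol and is issued by a trusted certificate authority (CA) after the CA has verified the server's identity. It provides server authentication and encryption of the transmitted data. Let's scrape a site whose certificate does not pass verification: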

```python
import requests

url = 'https://inv-veri.chinatax.gov.cn/'
resp = requests.get(url)
print(resp.text)
```

It raises an error:

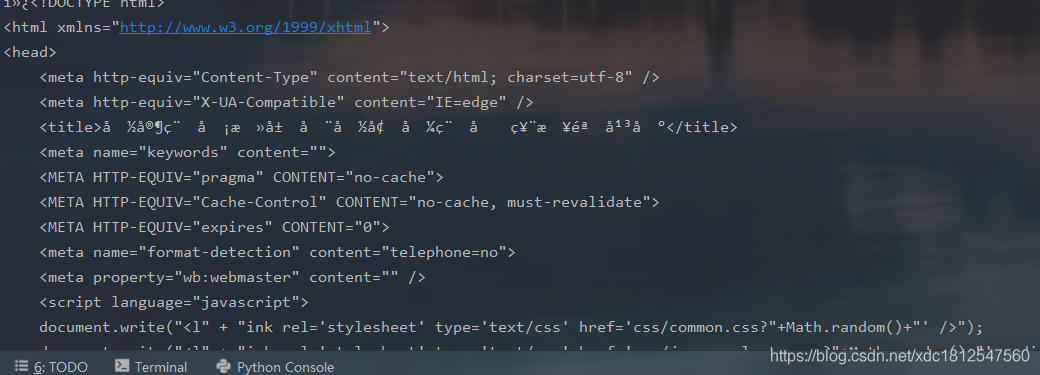

Let's modify the code:

```python
import requests

url = 'https://inv-veri.chinatax.gov.cn/'
# verify=False skips certificate verification for this request
resp = requests.get(url, verify=False)
print(resp.text)
```

Now the code fetches the page successfully again.
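`verify=False` switches off certificate checking entirely. requests then emits an `InsecureRequestWarning`, and the connection is no longer protected against a server impersonating the site. The minimal sketch below covers the available options. It assumes that you either have the site's CA certificate as a PEM file (the path `ca.pem` is hypothetical) or knowingly accept the risk. The helper names `verify_setting` and `fetch` are hypothetical too.

```python
from typing import Optional, Union

import requests
import urllib3


def verify_setting(ca_bundle: Optional[str] = None, insecure: bool = False) -> Union[str, bool]:
    """Choose the value for requests' verify argument."""
    if ca_bundle:
        return ca_bundle  # path to a CA bundle that trusts the site's certificate
    if insecure:
        return False      # skip verification (only for sites you trust)
    return True           # default: verify against requests' default CA bundle


def fetch(url: str, ca_bundle: Optional[str] = None, insecure: bool = False):
    verify = verify_setting(ca_bundle, insecure)
    if verify is False:
        # Silence the InsecureRequestWarning that verify=False triggers.
        urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    try:
        return requests.get(url, verify=verify, timeout=10)
    except requests.exceptions.SSLError as exc:
        print("certificate verification failed:", exc)
        return None


# Usage: fetch('https://inv-veri.chinatax.gov.cn/', insecure=True)
```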
